AI-Powered Deepfake Blackmail: The New Frontier of Cyber Extortion

- Jukta MAJUMDAR

- Aug 20, 2025


JUKTA MAJUMDAR | DATE May 05, 2025

Introduction

Artificial intelligence has ushered in an era of transformative possibilities, but it has also opened the door to unprecedented cyber threats. Among the most disturbing is the rise of AI-powered deepfake blackmail—where synthetic videos or voice recordings are used to impersonate individuals, manipulate perception, and extort victims. These threats are increasingly targeting corporate executives, infiltrating employee communications, and even swaying public opinion or stock markets. As the tools to generate such realistic content become cheaper and easier to use, organizations and individuals must prepare for a reality where visual or audio evidence can no longer be taken at face value.

Understanding Deepfake Blackmail

Deepfake blackmail involves the use of AI-generated media to fabricate a person’s likeness or voice in highly realistic ways. These fakes are then used to deceive, intimidate, or extract value, whether through direct financial extortion, threats of reputational damage, or unauthorized transactions. Unlike traditional cyberattacks, which typically exploit software flaws, this form of blackmail relies on psychological manipulation and exploits social trust. Fraudsters can create voice clips that mimic a CEO requesting an urgent wire transfer or generate videos that falsely implicate employees in compromising situations. The emotional impact and apparent credibility of such content often lead victims to act before verifying its authenticity.

Real-World Examples That Illustrate the Threat

In one notable case from 2019, cybercriminals used voice-cloning software to impersonate the chief executive of the German parent company of a UK-based energy firm. The UK firm’s CEO, believing he was following his boss’s verbal instruction, transferred €220,000 (about US$243,000) to a fraudulent supplier account (Trend Micro, 2019). The cloned voice reproduced the executive’s accent, tone, and speech patterns so precisely that it raised no suspicion.

Another instance involved a deepfake video of a political leader making offensive remarks, which briefly went viral before being debunked. Even after the video was exposed as fake, the damage had been done: public sentiment had been manipulated, however briefly, and financial markets had reacted.

In a more personal and invasive attack, employees at a global enterprise received emails containing what appeared to be explicit videos of themselves. These videos were AI-generated composites, yet convincing enough to cause panic. Attackers demanded payment in cryptocurrency to withhold public release. Although the content was fake, the reputational risk and psychological distress were very real.

Why Deepfakes Are So Difficult to Combat

One of the primary dangers of deepfakes is their realism. As the technology advances, distinguishing genuine from synthetic content becomes increasingly difficult without advanced forensic tools. These attacks exploit human emotions and trust rather than technical vulnerabilities, so traditional cybersecurity defenses, which are built to stop malicious code and network intrusions, do little to prevent them. Additionally, a single convincing fake can be reused across multiple attack vectors, which makes the cost of production worthwhile for cybercriminals running large-scale operations.

Mitigation Strategies for Organizations

Organizations must act swiftly and strategically to defend against deepfake-enabled cyber extortion. One of the most effective measures is investing in AI-based deepfake detection tools. These tools can analyze digital media for manipulation artifacts such as inconsistent lighting, unnatural facial movements, or voice anomalies. Additionally, companies should enforce strict verification for sensitive requests, especially financial transactions, by confirming them through a second, independent channel such as a callback to a number already on file, so that no action is taken based on a single mode of communication.
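The rule that no action should be taken on a single mode of communication can be written down as a simple policy check. The sketch below is a hypothetical illustration in Python, not a product, standard, or any particular company's control: the channel names, the `PaymentRequest` and `check_request` names, and the €10,000 dual-approval threshold are all assumptions chosen for the example. It encodes two controls. First, a request must be re-confirmed on a channel the organization itself initiates (a callback to a directory number, an in-person check, or an authenticated approval workflow), because email and inbound calls are exactly where a cloned voice or faked video arrives. Second, large transfers need sign-off from someone other than the person sending the money.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Channel(Enum):
    """Hypothetical channels through which a request can arrive or be re-verified."""
    EMAIL = auto()
    INBOUND_CALL = auto()        # voice or video call placed by the requester
    CALLBACK_DIRECTORY = auto()  # we call back a number taken from the internal directory
    IN_PERSON = auto()
    APPROVAL_WORKFLOW = auto()   # authenticated internal payments system


# Channels where the attacker chooses how to reach the victim, so a cloned
# voice, synthetic video, or spoofed email can show up on them.
ATTACKER_INITIATED = {Channel.EMAIL, Channel.INBOUND_CALL}

# Assumed threshold (illustrative only) above which a second approver is required.
DUAL_APPROVAL_THRESHOLD_EUR = 10_000.0


@dataclass
class PaymentRequest:
    amount_eur: float
    executor: str                                             # employee who would send the money
    confirmed_via: set[Channel] = field(default_factory=set)  # channels used to re-verify
    approvers: set[str] = field(default_factory=set)          # people who signed off


def check_request(req: PaymentRequest) -> list[str]:
    """Return the unmet controls; an empty list means the request may proceed."""
    problems = []
    # Control 1: at least one confirmation on a channel the organization initiates.
    if not (req.confirmed_via - ATTACKER_INITIATED):
        problems.append("confirm via callback, in person, or the approval workflow")
    # Control 2: large transfers need sign-off from someone other than the executor.
    if req.amount_eur >= DUAL_APPROVAL_THRESHOLD_EUR and not (req.approvers - {req.executor}):
        problems.append("obtain sign-off from a second approver")
    return problems


# Hypothetical request phoned in by a convincing voice; a follow-up call
# from the same voice does not count as independent confirmation.
phoned_in = PaymentRequest(
    amount_eur=220_000,
    executor="uk_ceo",
    confirmed_via={Channel.INBOUND_CALL},
)
print(len(check_request(phoned_in)))  # 2

# After a callback to a known number and a second sign-off, both controls pass.
phoned_in.confirmed_via.add(Channel.CALLBACK_DIRECTORY)
phoned_in.approvers.add("finance_director")
print(check_request(phoned_in))  # []
```

The design choice worth noting is that the check ignores how convincing the original call was: a perfect voice clone still fails Control 1 because it can only ever reach the victim on an attacker-initiated channel. In a real organization such rules would more likely be enforced inside the payment system itself than in a standalone script, so that staff cannot bypass them under pressure.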

Employee education is also vital. Cybersecurity training programs must include modules on identifying synthetic media and recognizing suspicious behavior. Staff, especially those in finance or leadership positions, should be taught to pause and verify instructions that seem urgent or emotionally charged.

Furthermore, organizations should develop a crisis response plan that includes legal, communication, and cybersecurity protocols in the event of a deepfake incident. Knowing how to respond rapidly and publicly can minimize damage and restore trust. Finally, companies should advocate for clearer legal frameworks around deepfake misuse and support regulatory efforts to combat synthetic media abuse across digital platforms.

Conclusion

The era of AI-powered deepfake blackmail is here, and it is reshaping how cyber extortion is carried out. No longer limited to email scams or stolen credentials, attackers now use artificial intelligence to impersonate voices, faces, and identities with terrifying accuracy. As deepfakes become harder to detect and more psychologically manipulative, organizations must adopt a proactive, multilayered defense. This means integrating detection tools, refining verification procedures, and ensuring that both leadership and staff are prepared to confront synthetic threats head-on. In the age of AI, reality can be manufactured, and only vigilance, education, and intelligent systems can prevent deception from becoming disaster.

Citations

Business Insider. (2025, May 3). The clever new scam your bank can't stop. https://www.businessinsider.com/bank-account-scam-deepfakes-ai-voice-generator-crime-fraud-2025-5

Financial Times. (2025, May 3). The rise of deepfake scams—and how not to fall for one. https://www.ft.com/content/fcbdc88f-bbfd-4338-915a-9ef7970b2123

Trend Micro. (2019, September 3). Unusual CEO fraud via deepfake audio steals US$243,000 from U.K. company. https://www.trendmicro.com/vinfo/us/security/news/cyber-attacks/unusual-ceo-fraud-via-deepfake-audio-steals-us-243-000-from-u-k-company

Global Initiative Against Transnational Organized Crime. (2023). Criminal exploitation of deepfakes in South East Asia. https://globalinitiative.net/analysis/deepfakes-ai-cyber-scam-south-east-asia-organized-crime/

Image Citations

Broadcom News & Stories. (2023, March 16). VMware report warns of deepfake attacks and cyber extortion. https://news.broadcom.com/releases/vmware-report-warns-of-deepfake-attacks-and-cyber-extortion

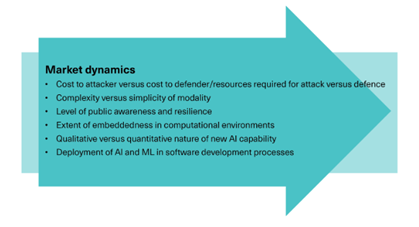

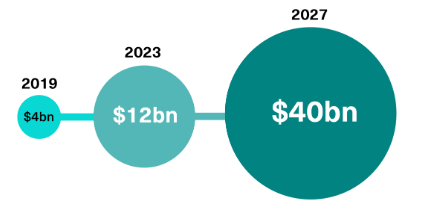

Burton, J., Janjeva, A., & Moseley, S. (n.d.). AI and Serious Online Crime. Centre for Emerging Technology and Security. https://cetas.turing.ac.uk/publications/ai-and-serious-online-crime
